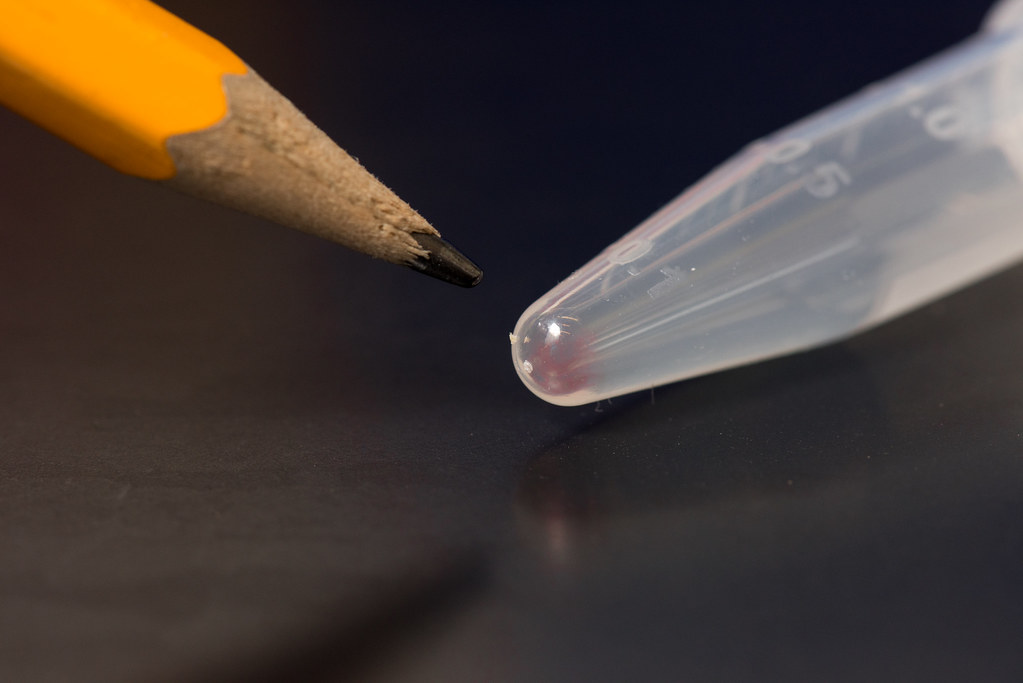

I recently stumbled upon the image on the right, which distills the changes in data storage over the past 40-plus years. Perhaps even more amazing is that data storage could shrink further still. The genetic material that stores all the information required to build a person or a pear or a penguin may be the key to creating even smaller data storage that never becomes obsolete.

Our genome is often compared to a computer, where DNA is the code. In fact, DNA is a proven data storage system with billions of years of reliable use. While your old floppy disks may now be unreadable, the tools required to read and copy DNA are present in every genome, making it unlikely that we would lose the ability to decode DNA. These advantages led scientists to ask: could DNA also be used to store other types of data? Perhaps the information that would normally be encoded by 0's and 1's in your hard drive could be stored in sequences based on ACGT's.
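To make the idea concrete, here is a minimal sketch assuming the simplest possible code: each pair of bits becomes one base (00→A, 01→C, 10→G, 11→T). The mapping table and the function names `bytes_to_dna` and `dna_to_bytes` are hypothetical, chosen for illustration; the projects described below each use their own, more elaborate schemes.

```python
# Hypothetical 2-bits-per-base table, for illustration only.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def bytes_to_dna(data: bytes) -> str:
    """Write each byte as 8 bits, then read the bits two at a time as bases."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def dna_to_bytes(seq: str) -> bytes:
    """Reverse the mapping: bases -> bit pairs -> 8-bit bytes."""
    bits = "".join(BASE_TO_BITS[base] for base in seq)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = bytes_to_dna(b"Hi")
print(strand)                # CAGACGGC
print(dna_to_bytes(strand))  # b'Hi'
```

Two bits per base is the ceiling for a four-letter alphabet. A naive table like this one happily writes runs such as AAAA (the byte 0x00), which are hard to synthesize and sequence accurately, so practical schemes give up some density to avoid them.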

The first publication to propose that DNA could be used for purposes other than building an organism came in 1999 from Bancroft and colleagues in the journal Nature. They suggested that genomic steganography could be used to hide coded messages in DNA for espionage. Using a simple substitution cipher in which each codon (a triplet of nucleotides) stands for one alphanumeric character, the researchers synthesized a DNA sequence encoding the message "June 6 invasion: Normandy". The message was flanked by sequences that allowed the recipient to find and decode it. The final sequence of just 109 nucleotides was hidden among denatured human DNA and, just like the microdots used in earlier espionage, placed on top of a period in a typewritten message.
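The codon cipher itself is easy to sketch. The key below is hypothetical: it simply assigns codons to characters in alphabetical order and is not the table used in the 1999 study. The flanking sequences and the `hide`/`reveal` names are likewise made up; the flanks stand in for the known sequences a recipient would use to locate the message.

```python
from itertools import product

# Hypothetical key (NOT the 1999 study's table): codons assigned in order.
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789 :.,"
CODONS = ["".join(c) for c in product("ACGT", repeat=3)]  # all 64 triplets
ENCODE = dict(zip(ALPHABET, CODONS))  # 40 characters use 40 of the 64 codons
DECODE = {codon: char for char, codon in ENCODE.items()}

# Hypothetical 20-nt flanks that the recipient is assumed to know in advance.
LEFT_FLANK = "ACGTACGTACGTACGTACGT"
RIGHT_FLANK = "TGCATGCATGCATGCATGCA"


def hide(message: str) -> str:
    """Replace every character with its codon and wrap the result in flanks."""
    body = "".join(ENCODE[ch] for ch in message.upper())
    return LEFT_FLANK + body + RIGHT_FLANK


def reveal(strand: str) -> str:
    """Find the message between the known flanks and read it codon by codon."""
    start = strand.index(LEFT_FLANK) + len(LEFT_FLANK)
    end = strand.rindex(RIGHT_FLANK)
    body = strand[start:end]
    return "".join(DECODE[body[i:i + 3]] for i in range(0, len(body), 3))


strand = hide("June 6 invasion: Normandy")
print(len(strand))     # 115: 25 characters x 3 nt, plus two 20-nt flanks
print(reveal(strand))  # JUNE 6 INVASION: NORMANDY
```

This toy version yields 115 nucleotides for the same message, so it is not meant to reproduce the 109-nucleotide strand described above; it only shows how a codon-per-character substitution works.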

Subsequent work from Bancroft's group and others in the early 2000s suggested that DNA could help address the growing need for data storage. Computer scientists estimate that by 2020 there will be 4.4 × 10²² bytes (44 zettabytes) of digital data; to give you a sense of scale, 1 ZB would be about 152 million years of high-resolution video. Even with advances in storage density, storing just 1 ZB requires more than 1000 kilograms of the cobalt alloy used to make hard drives. In contrast, 1 gram of DNA could store 4.6 × 10¹⁸ bytes.
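Taking the paragraph's figures at face value, a short back-of-the-envelope calculation makes the contrast concrete. The constants are the estimates quoted above, not independent measurements.

```python
# Estimates quoted in the paragraph above (not measurements).
BYTES_PER_ZB = 10**21        # 1 zettabyte = 10^21 bytes
DNA_BYTES_PER_GRAM = 4.6e18  # claimed capacity of 1 g of DNA
WORLD_DATA_2020_ZB = 44      # projected digital data for 2020

grams_per_zb = BYTES_PER_ZB / DNA_BYTES_PER_GRAM
grams_world = WORLD_DATA_2020_ZB * grams_per_zb

print(f"DNA needed for 1 ZB:  {grams_per_zb:.0f} g")          # 217 g
print(f"DNA needed for 44 ZB: {grams_world / 1000:.1f} kg")   # 9.6 kg
```

By these numbers, a zettabyte fits in roughly 217 g of DNA, compared with more than 1000 kg of cobalt alloy.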

Early publications were proof-of-principle experiments that aimed to encode increasingly large data files in DNA. The general approach, outlined above, converts a digital file to binary and then to DNA. The beginnings were admittedly small, just as scientists had to sequence the genome of E. coli before they could complete the human genome. One problem is that DNA sequencing technology is improving much faster than DNA synthesis: you could read the data you stored faster and more cheaply than you could write it. Creating long, accurate strands of DNA had technical and financial limitations.

To circumvent this problem, George Church's lab used multiple copies of short DNA sequences to encode an entire book (53,246 words), 11 JPG images, and a JavaScript program. The paper, published in Science in 2012, also describes the recovery and reassembly process. The following year, a Nature paper from Ewan Birney's lab at the European Bioinformatics Institute reported a similar approach that increased the file size and decreased decoding errors. The final DNA file held 739 KB of information, including text, pictures, videos, and audio files, as well as a PDF of Watson and Crick's classic Nature paper describing the structure of DNA.
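Church's group reportedly stored one bit per base (A or C for 0, G or T for 1), which leaves a free choice at every position that can be spent on avoiding repeated letters, and split the file into short fragments that each carry an address so they can be reassembled in any order. The sketch below illustrates those two ideas under stated assumptions: the block sizes, the rule of picking whichever base differs from the previous one, and all function names are hypothetical, and it leaves out the primer sequences and error handling a real pipeline needs.

```python
from __future__ import annotations

import random

# Hypothetical fragment layout: a 19-bit address followed by 96 data bits.
ADDRESS_BITS = 19
DATA_BITS = 96

ZERO, ONE = "AC", "GT"  # one bit per base: A/C mean 0, G/T mean 1


def bits_to_bases(bits: str) -> str:
    """Encode one bit per base, never repeating the previous base."""
    out: list[str] = []
    for bit in bits:
        first, second = ZERO if bit == "0" else ONE
        out.append(second if out and out[-1] == first else first)
    return "".join(out)


def bases_to_bits(seq: str) -> str:
    return "".join("0" if base in ZERO else "1" for base in seq)


def encode_file(data: bytes) -> list[str]:
    """Cut the bitstream into fixed-size blocks and prefix each with its address."""
    bits = "".join(f"{b:08b}" for b in data)
    fragments = []
    for address, start in enumerate(range(0, len(bits), DATA_BITS)):
        block = bits[start:start + DATA_BITS].ljust(DATA_BITS, "0")  # pad last block
        header = format(address, f"0{ADDRESS_BITS}b")
        fragments.append(bits_to_bases(header + block))
    return fragments


def decode_file(fragments: list[str], n_bytes: int) -> bytes:
    """Reassemble fragments by address; order and duplicates do not matter."""
    blocks = {}
    for frag in fragments:
        bits = bases_to_bits(frag)
        blocks[int(bits[:ADDRESS_BITS], 2)] = bits[ADDRESS_BITS:]
    bits = "".join(blocks[a] for a in sorted(blocks))[: n_bytes * 8]
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


message = b"DNA as an archival medium"
pool = encode_file(message) * 3  # several copies of every short fragment
random.shuffle(pool)             # fragments come back in no particular order
print(decode_file(pool, len(message)))  # b'DNA as an archival medium'
```

Halving the density to one bit per base is the price of that freedom, and keeping several copies of each fragment lets the decoder cope with fragments that go missing. This toy decoder simply keeps one copy per address and does no error correction.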

In July 2016, researchers from the University of Washington collaborated with Microsoft to push the limits of DNA storage again (coverage in The Verge). Their storage reached 200 MB and included copies of the Universal Declaration of Human Rights, the top 100 books from Project Gutenberg, and the Crop Trust seed database; for fun, they encoded a video from the band OK Go for the song "This Too Shall Pass".

Most recently, a paper in Science from Yaniv Erlich and Dina Zielinski, who work at the intersection of molecular biology and computer science, details a new architecture for more efficient DNA storage. They adapted fountain coding, which streaming services like Netflix and Spotify currently use to eliminate gaps in playback. The method brought storage density much closer to the theoretical limit for DNA storage (1.83 bits per nucleotide). Their DNA storage sample included the movie The Arrival of a Train, an entire computer operating system, a computer virus, and an Amazon gift card (which one of the researchers' Twitter followers quickly decoded). Although the amount of data (only 2.2 MB) was smaller than in the University of Washington and Microsoft project, the method greatly improved data density and readability. One problem with previous storage methods is that reading the DNA uses up the original sample. Amplifying DNA to make more copies is easy, but it can introduce mistakes. Erlich and Zielinski's fountain technique permitted error-free amplification even after 10 complete reads. Their work achieved a density of 215 petabytes (2.15 × 10¹⁷ bytes) per gram of DNA, which would allow storage of all the world's data in the trunk of a car.
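Fountain codes turn a file into a practically unlimited stream of "droplets", each the XOR of a random subset of file segments; any sufficiently large collection of droplets lets the decoder rebuild the file, so strands that are lost or unreadable can be tolerated. The following is a minimal Luby transform sketch in that spirit, not Erlich and Zielinski's implementation. It uses the simpler ideal soliton degree distribution (DNA Fountain used the robust soliton), keeps droplets as bytes instead of converting them to DNA, and skips the screening step that rejects sequences with long homopolymers or skewed GC content. The function names and the choice of k = 8 segments are hypothetical.

```python
from __future__ import annotations

import random


def split(data: bytes, k: int) -> list[bytes]:
    """Pad the file with zero bytes and cut it into k equal segments."""
    size = -(-len(data) // k)  # ceiling division
    data = data.ljust(size * k, b"\0")
    return [data[i * size:(i + 1) * size] for i in range(k)]


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def droplet_indices(seed: int, k: int) -> list[int]:
    """Regenerate which segments a droplet combines, using only its seed.

    Degrees follow the ideal soliton distribution (a simplification).
    """
    rng = random.Random(seed)
    weights = [1 / k] + [1 / (d * (d - 1)) for d in range(2, k + 1)]
    degree = rng.choices(range(1, k + 1), weights=weights)[0]
    return rng.sample(range(k), degree)


def make_droplet(segments: list[bytes], seed: int) -> tuple[int, bytes]:
    """A droplet is a seed plus the XOR of the segments that seed selects."""
    payload = bytes(len(segments[0]))
    for i in droplet_indices(seed, len(segments)):
        payload = xor(payload, segments[i])
    return seed, payload


def try_decode(droplets: list[tuple[int, bytes]], k: int) -> list[bytes] | None:
    """Peeling decoder: returns the k segments, or None if it gets stuck."""
    pending = [(set(droplet_indices(s, k)), p) for s, p in droplets]
    solved: dict[int, bytes] = {}
    progress = True
    while progress and len(solved) < k:
        progress = False
        remaining = []
        for idx, payload in pending:
            known = idx & set(solved)
            for i in known:  # XOR out segments that are already recovered
                payload = xor(payload, solved[i])
            idx = idx - known
            if len(idx) == 1:  # exactly one unknown segment left: solved
                solved[idx.pop()] = payload
                progress = True
            elif idx:  # still ambiguous; revisit on the next pass
                remaining.append((idx, payload))
        pending = remaining
    return [solved[i] for i in range(k)] if len(solved) == k else None


def fountain_roundtrip(data: bytes, k: int = 8) -> tuple[bytes, int]:
    """Keep emitting droplets until the receiver can rebuild the file."""
    segments = split(data, k)
    droplets = []
    seed = 0
    while True:
        droplets.append(make_droplet(segments, seed))
        seed += 1
        decoded = try_decode(droplets, k)
        if decoded is not None:
            return b"".join(decoded)[: len(data)], len(droplets)


message = b"The Arrival of a Train"
restored, used = fountain_roundtrip(message)
print(restored == message, used)  # prints True followed by the droplet count
```

Only the seed has to travel with each droplet, because the decoder regenerates the segment list from it. Since any large enough set of droplets works, losing some strands just means reading a few more.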

Another stumbling block was that DNA was writable but not rewritable, which limited its applications to archival data storage. Two recent papers (in Nature Communications and PNAS) report methods that allow DNA to be rewritten (bringing us from 8-track to cassette tapes) and to be read from any point in the sample rather than from a fixed starting point (bringing us from cassette to CD).

> 1 gram of DNA can store 4.5 × 10¹⁸ bytes

While there has been tremendous progress in increasing the amount and density of stored data, the major roadblock remains the time it takes to encode and decode data in DNA. Cost is another area where inorganic data storage still beats carbon-based storage, especially the cost of synthesizing DNA: the most recent paper reported $3,500/MB, down from $12,400/MB in the 2012 paper.
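Plugging the quoted synthesis costs into a few lines of arithmetic shows both the progress and the remaining gap. The figures are the per-megabyte costs from the paragraph above (decimal units, so 1 GB = 1,000 MB); the 2.2 MB archive is the DNA Fountain dataset mentioned earlier, and only synthesis cost is counted.

```python
# Synthesis costs quoted above, in US dollars per megabyte.
COST_2012 = 12_400
COST_RECENT = 3_500

improvement = COST_2012 / COST_RECENT
cost_fountain_archive = 2.2 * COST_RECENT  # the 2.2 MB DNA Fountain dataset
cost_one_gb = 1_000 * COST_RECENT          # 1 GB = 1,000 MB (decimal)

print(f"Improvement since 2012: {improvement:.1f}x")                # 3.5x
print(f"Writing 2.2 MB: ${cost_fountain_archive:,.0f}")            # $7,700
print(f"Writing 1 GB (1,000 MB): ${cost_one_gb:,.0f}")             # $3,500,000
```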

Despite these limitations, biologists are teaming up with computer scientists to explore the future of DNA data storage. This effort is largely driven by the need to store increasing amounts of digital data with decreasing resources. Estimates indicate that by 2040 global memory demand (3 × 10²⁴ bytes) will exceed the supply of silicon necessary to build traditional data storage devices.

Obsolescence is another shortcoming of current storage methods. Just as it has become difficult to play your cassette tape collection (much to my chagrin), your old floppies and ZIP disks are probably unreadable too. Scientists conjecture that because DNA is the basis for life on Earth, we will always have methods for DNA sequencing. This gives DNA a huge advantage for long-term archival storage. Luckily, DNA also has great fidelity over the long term: scientists are increasingly able to recover readable sequences from ancient DNA samples, with the best results coming from samples stored at low temperatures. Thus, you could imagine a long-term storage system, like a secure server in a remote tundra, where the DNA backup disk for restarting civilization would be stable and safe.

This isn't completely crazy. The Svalbard Global Seed Vault is a huge storage site in the frozen tundra of Norway where scientists and governments deposit plant seeds. The idea is to keep a stock of the original seeds in case civilization collapses. I am sure we could rent a shoebox-sized space there for storing all the relevant files from humankind (which means there probably won't be room for cat videos). Resource limitations seem almost certain to make digital DNA storage, born of a thought experiment over beer, not just a reality but a necessity.
